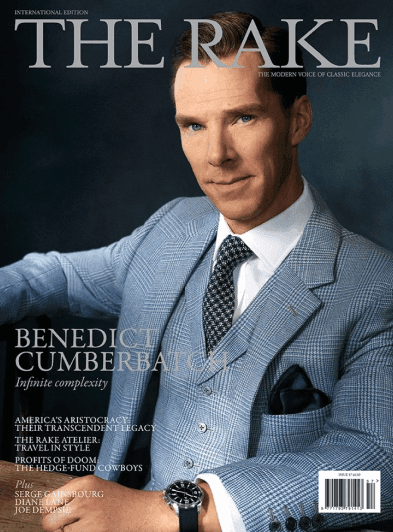

The Rake - High-quality clothing store

How it all started

When we took over the project, The Rake had already been running a Shopify-based online store and a Wordpress blog for almost a year. The blog was set up on the main domain, while a subdomain pointed to the Shopify platform.

Observation

At first glance, both solutions had a similar design, but once you started browsing, the differences were obvious. Keeping their look consistent meant all design work was always doubled, as Shopify and Wordpress couldn't share a template. Apart from the similar design, there was no connection between the two platforms.

The blog's articles rarely mentioned products from the online store's catalog, and when they did, it took the editors a lot of manual work to find and paste the correct URLs or add product pictures. Any changes to stock levels or URLs also had to be reflected in the articles.

As a result, even though the blog was gaining a lot of attention (over a thousand people on the website daily), the conversion rate was unsatisfactory.

Idea

At first glance, both solutions had a similar design, but once you started browsing it, the differences were bold. Keeping their look similar meant all the work on the design was always doubled as Shopify and Wordpress couldn't share a template. As a result, besides similar design, there was no connection between these two platforms.

Blog's articles rarely mentioned products from the online store catalog, and if they did, it required a lot of editor's manual work to find and paste the correct URLs or to add product's pictures. Any changes to the stock level or URLs also had to be reflected in the articles.

As a result, even though the blog was gaining a lot of attention (over a thousand people on the website daily), the conversion rate was unsatisfactory.

Searching for the right tech approach

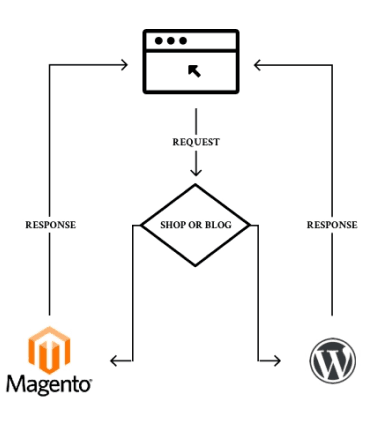

Both Magento and Wordpress have built-in rendering engines, but they aren't compatible with each other. We had three options to choose from:

- 1Build two separate themes, each for every platform, and make sure they look alike.

- 2Create a separate front-end application that would connect to and retrieve data from both platforms.

- 3Use one platform as a front-end and build a connector to the other one.

Technical overview

We decided to keep this solution and build an ecommerce website to extend the blog's functionalities. We agreed that the best option would be to use Magento 2 which was released earlier that year.

Option 1

It was clear to us that the first option, while the easiest, would be challenging to maintain in the long term. Our work would be doubled as every change to the front-end part would require changes to both themes.

What's more, the HTML structures in Magento and Wordpress are different, so it'd be almost impossible to maintain the same look in both themes.

There was another disadvantage of such an approach: all the data would have to be retrieved twice, once for the blog and once for the online shop.

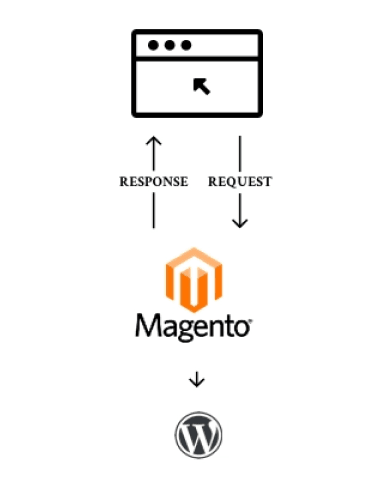

Option 2

The second option doesn't have the problem described above, but it wasn't ideal for us either. As Magento is an advanced application, it would be the best choice for the store's front-end. It would connect to Wordpress only to fetch editorial content, so one theme would be enough.

However, to provide Magento with that content, we would need to make an HTTP request in the background during rendering, build a synchronizer that pushes data from Wordpress to Magento, or create a way for Magento to read Wordpress tables directly.

Only the last of these seemed viable, but even then, adding any new feature to Wordpress would require changes to Magento's front-end.

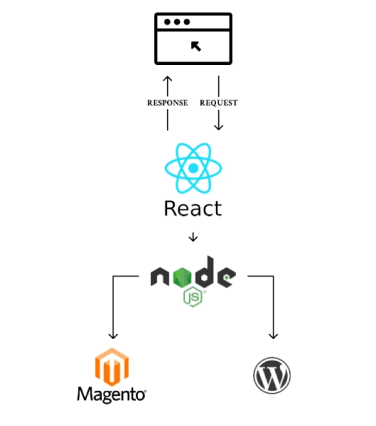

Option 3

The third approach was the most challenging one from a technological perspective, but it was the best option for maintenance and future development.

It required more work from the front-end team as the whole application had to be built from scratch. However, separating the front-end and back-end parts provided us with many advantages.

The most important one, from our perspective, was that changes made to the internal structure or logic of one application no longer had to be mirrored in the other. The front-end application would use the REST APIs of both platforms; as these stay stable between upgrades, we could rely on them.
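The minimal TypeScript sketch below illustrates a standalone front-end that talks to both platforms through their REST APIs. The paths follow the standard Magento 2 (`/rest/V1/products/{sku}`) and WordPress (`/wp-json/wp/v2/posts`) REST routes. The hostnames, the `ArticlePage` view model, and the helper names are hypothetical, and only a few response fields are modeled.

```typescript
// Assumed base URLs; the case study does not name the real hosts.
const MAGENTO_BASE = "https://shop.example.com";
const WORDPRESS_BASE = "https://www.example.com";

// Subset of the fields Magento 2 returns from GET /rest/V1/products/{sku}.
interface MagentoProduct {
  sku: string;
  name: string;
  price: number;
}

// Subset of the fields WordPress returns from GET /wp-json/wp/v2/posts.
interface WordpressPost {
  slug: string;
  title: { rendered: string };
  content: { rendered: string };
}

// Hypothetical view model the front-end assembles from both back-ends.
interface ArticlePage {
  title: string;
  html: string;
  products: { sku: string; name: string; price: number }[];
}

function magentoProductUrl(sku: string): string {
  // SKUs may contain spaces or slashes, so encode them as a path segment.
  return `${MAGENTO_BASE}/rest/V1/products/${encodeURIComponent(sku)}`;
}

function wordpressPostUrl(slug: string): string {
  // The posts collection accepts a slug filter and returns an array.
  return `${WORDPRESS_BASE}/wp-json/wp/v2/posts?slug=${encodeURIComponent(slug)}`;
}

// Merges one editorial post with the catalog products it mentions.
function buildArticlePage(post: WordpressPost, products: MagentoProduct[]): ArticlePage {
  return {
    title: post.title.rendered,
    html: post.content.rendered,
    products: products.map(({ sku, name, price }) => ({ sku, name, price })),
  };
}
```

In practice, the front-end (and later the middleware) would fetch both URLs and pass the parsed JSON to a function like `buildArticlePage`. Neither platform needs to know the other exists.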

Final Decisions

Once we decided to build a separate front-end application, we had to pick the right technology for it.

Since the stack was growing in complexity, we wanted to make the front-end as fast and light as possible. Hence we chose a JavaScript framework, as it gave us a fast and responsive front-end, a mobile-first approach, and cross-browser compatibility. Besides, PWA technology was gaining a lot of traction, so we knew it was the right solution to invest in.

Technical Overview

We used React for the client-side application, with server-side rendering support, and Node.js with Express served as middleware retrieving data from both back-ends. This setup allowed us to connect safely to endpoints that required admin privileges; without it, we would have needed to expose the admin token to the user's browser.

At this point, it was safe to say that our initial assumptions had proved correct. We had built a stable and fast front-end application that could retrieve data no matter where it was stored. We could also easily develop new features for Wordpress and Magento separately, knowing that implementing them in one platform wouldn't affect the other.
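The sketch below shows how the middleware keeps the admin token away from the client. It is a framework-agnostic illustration, not the project's Express code. The allowlist, function names, and example paths are assumptions. It relies only on the fact that Magento 2 accepts admin tokens in an `Authorization: Bearer` header.

```typescript
type HttpMethod = "GET" | "POST" | "PUT";

// Request the middleware sends upstream to Magento on the browser's behalf.
interface UpstreamRequest {
  url: string;
  method: HttpMethod;
  headers: Record<string, string>;
}

// Hypothetical allowlist of admin-protected Magento paths the browser may
// reach through the middleware. Anything else is rejected.
const ADMIN_PATH_PREFIXES = ["/rest/V1/products", "/rest/V1/categories"];

function isAllowed(path: string): boolean {
  return ADMIN_PATH_PREFIXES.some(
    (prefix) =>
      path === prefix ||
      path.startsWith(prefix + "/") ||
      path.startsWith(prefix + "?"),
  );
}

// Builds the upstream request. The admin token is only ever added here, on
// the server, so it never appears in anything sent to the user's browser.
function buildAdminRequest(
  magentoBase: string,
  adminToken: string,
  path: string,
  method: HttpMethod = "GET",
): UpstreamRequest {
  if (!isAllowed(path)) {
    throw new Error(`Path not allowed through middleware: ${path}`);
  }
  return {
    url: magentoBase + path,
    method,
    headers: {
      // Magento 2 accepts admin and integration tokens as Bearer tokens.
      Authorization: `Bearer ${adminToken}`,
      "Content-Type": "application/json",
    },
  };
}
```

In an Express app, a route handler would call something like `buildAdminRequest` with a token read from server-side configuration. It would then forward only the response body to the browser.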

Another challenge on the horizon

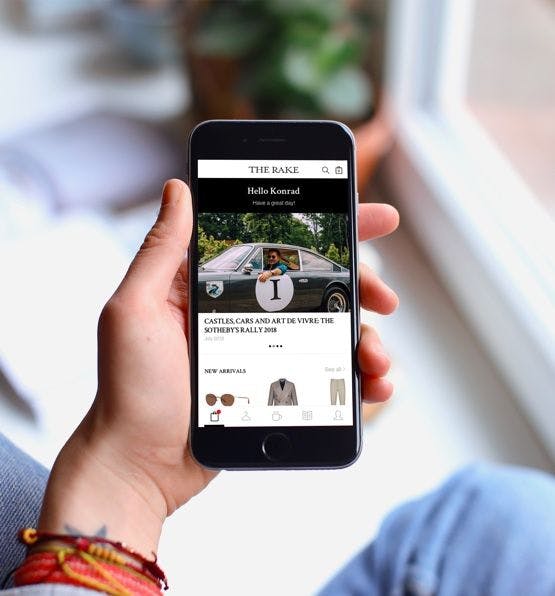

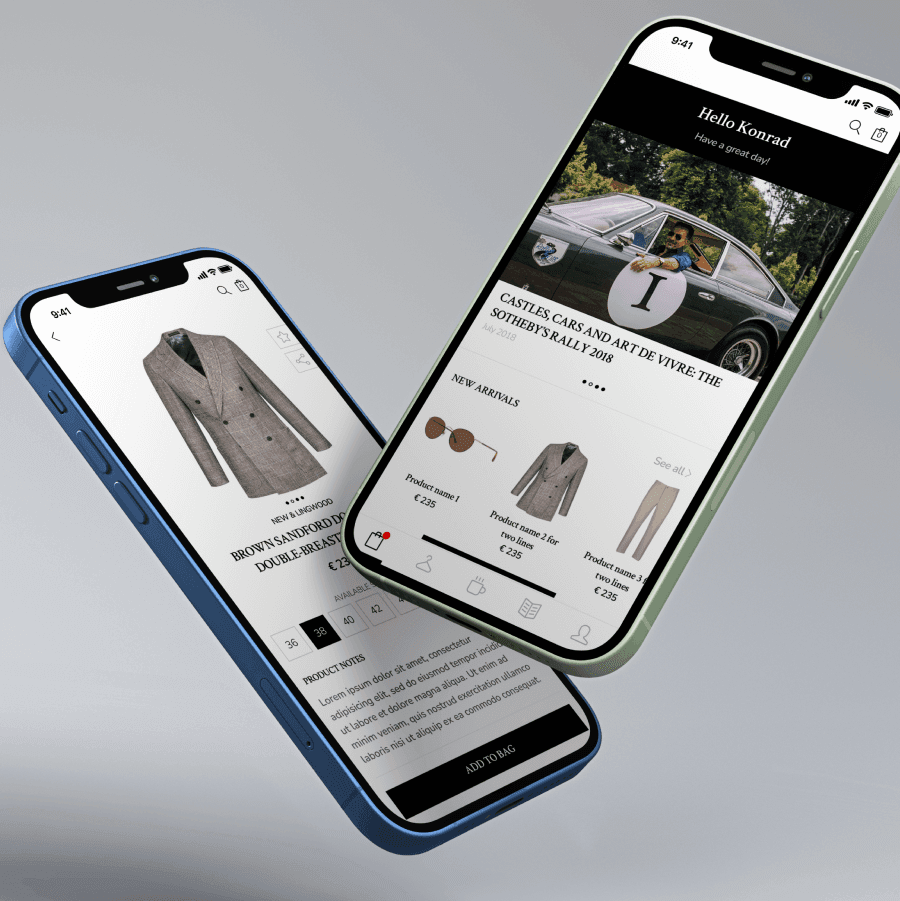

At one point, the Client decided that having a native smartphone app would drive more sales.

Despite the growing popularity of the PWA approach in the Android world, Apple was reluctant to provide support for the new features.

Service worker support arrived in Safari with iOS 11.3 in March 2018, but only with a limited set of features. Therefore, we decided to focus on iOS and build a native app for it.

How we did it

Technical overview

First, we had to work out how to fit a completely new point of sale into our stack.

The application had to get catalog data from Magento and editorial data from Wordpress while providing a smooth, seamless shopping experience. We knew that, to keep everything under control, orders had to end up in Magento, where the fulfillment process takes place. The easiest option was to use Magento's shopping cart, which gathers all the data required to create a new order.

It also solved the problem of fetching payment and shipping methods, because Magento requires a saved cart to prepare that data.

The editorial content also hid some surprises. The native app didn't use HTML as its primary rendering format, yet Wordpress stored all posts in its MySQL database as HTML. Furthermore, we had several custom plugins that helped content editors insert product data into blog posts. These plugins injected smart tags into the content, which the web front-end application converted into HTML, retrieving the additional product data along the way.
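The sequence below illustrates the cart-based flow. It lists the Magento 2 guest-cart REST calls that take a cart to a placed order. It assumes guest checkout, because the case study doesn't say whether the app used guest carts or logged-in customer carts (`/rest/V1/carts/mine/...`). The `Step` type and function names are hypothetical.

```typescript
type HttpMethod = "GET" | "POST" | "PUT";

interface Step {
  method: HttpMethod;
  path: string;
  purpose: string;
}

// Creating a guest cart returns a masked cart ID used by every later call.
const CREATE_GUEST_CART: Step = {
  method: "POST",
  path: "/rest/V1/guest-carts",
  purpose: "create an empty cart and obtain its masked ID",
};

// The calls, in order, that turn a saved guest cart into a Magento order.
// Shipping and payment methods are only listed once the cart exists.
function guestCheckoutSteps(cartId: string): Step[] {
  const cart = `/rest/V1/guest-carts/${encodeURIComponent(cartId)}`;
  return [
    { method: "POST", path: `${cart}/items`, purpose: "add products to the cart" },
    { method: "POST", path: `${cart}/estimate-shipping-methods`, purpose: "list shipping methods for an address" },
    { method: "POST", path: `${cart}/shipping-information`, purpose: "save the address and chosen shipping method" },
    { method: "GET", path: `${cart}/payment-methods`, purpose: "list payment methods for this cart" },
    { method: "PUT", path: `${cart}/order`, purpose: "place the order with the chosen payment method" },
  ];
}
```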
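One way to make smart-tag content usable by a native app is to split it into typed blocks instead of rendering HTML. The app can then draw product tiles natively. The sketch below assumes a hypothetical `[product sku="..."]` tag syntax, since the real plugins' format isn't described. It also assumes this conversion would run in the Node.js middleware serving the app.

```typescript
type ContentBlock =
  | { type: "html"; html: string }
  | { type: "product"; sku: string };

// Hypothetical smart-tag syntax standing in for the plugins' real format.
const PRODUCT_TAG = /\[product sku="([^"]+)"\]/;

function toBlocks(content: string): ContentBlock[] {
  // With a capturing group, split() returns the surrounding text at even
  // indices and the captured SKUs at odd indices.
  const parts = content.split(PRODUCT_TAG);
  const blocks: ContentBlock[] = [];
  parts.forEach((part, i) => {
    if (i % 2 === 1) {
      blocks.push({ type: "product", sku: part });
    } else if (part.trim() !== "") {
      // Skip empty fragments, e.g. when a tag starts or ends the post.
      blocks.push({ type: "html", html: part });
    }
  });
  return blocks;
}
```

The app would then fetch product details for each `product` block by SKU. The remaining `html` fragments are small enough to display with a lightweight text renderer.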

Problem

Another problem we had to face was the speed of the existing stack. We fetched catalog and blog data directly from the back-ends. This worked well with Wordpress, but Magento proved to be rather slow. We were already using a caching mechanism in our Node.js middleware layer to speed up the stack.

Unfortunately, the first (uncached) request was still visibly slower, especially for Magento data. Cache invalidation also turned out to be difficult to manage. We could send a “cache clear” request only after product data had changed, but this could lead to stale data being stored in the cache: if the front-end requested the data before it had reached Magento's index tables, the old values would be cached again.
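The cache-invalidation race can be reproduced with a toy model. `ToyMagento` and `MiddlewareCache` are hypothetical simplifications (one price per SKU, no TTL), not the project's middleware. The point is the order of events.

```typescript
// Toy model: Magento's API serves prices from index tables that are only
// refreshed when reindexing runs, some time after a product is saved.
class ToyMagento {
  private saved = new Map<string, number>();
  private indexed = new Map<string, number>();

  savePrice(sku: string, price: number): void {
    this.saved.set(sku, price);
  }

  reindex(): void {
    this.indexed = new Map(this.saved);
  }

  apiGetPrice(sku: string): number | undefined {
    return this.indexed.get(sku);
  }
}

// Minimal middleware cache: entries live until explicitly invalidated.
class MiddlewareCache {
  private store = new Map<string, number | undefined>();
  private backend: ToyMagento;

  constructor(backend: ToyMagento) {
    this.backend = backend;
  }

  getPrice(sku: string): number | undefined {
    if (!this.store.has(sku)) {
      this.store.set(sku, this.backend.apiGetPrice(sku));
    }
    return this.store.get(sku);
  }

  invalidate(sku: string): void {
    this.store.delete(sku);
  }
}

// Replays the problematic sequence and returns the prices the front-end saw.
function replayRace(): Array<number | undefined> {
  const magento = new ToyMagento();
  const cache = new MiddlewareCache(magento);
  const seen: Array<number | undefined> = [];

  magento.savePrice("SHIRT", 100);
  magento.reindex();
  seen.push(cache.getPrice("SHIRT")); // 100, now cached

  magento.savePrice("SHIRT", 80); // admin changes the price
  cache.invalidate("SHIRT"); // "cache clear" sent right after the save
  seen.push(cache.getPrice("SHIRT")); // index not updated yet: 100 is re-cached

  magento.reindex(); // the index now holds 80...
  seen.push(cache.getPrice("SHIRT")); // ...but the cache still returns 100
  return seen;
}
```

In this toy model, the stale entry would not occur if the invalidation were sent after reindexing finishes, rather than right after the save.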

Technical overview

At that point, we started looking for a solution that would help us resolve those issues.

Keeping our previous experience in mind, we first looked into a search engine. Moving all data into a single store would make the stack more complex, but given the new requirements we faced, the benefits of such a solution outweighed the costs.

It would give us a single place to fetch data from, along with fast and advanced search options. Since we were already using Algolia as our search engine, we knew this approach worked well. The question was whether to extend the data we already stored in Algolia or to set up our own Elasticsearch server. The cost calculation came out in favor of an in-house search server maintained by our DevOps team, for two reasons: there was no simple way to extend the Algolia modules, and the service itself was costly.

At the time, Algolia's pricing had two tiers, Starter and Pro. Each tier was limited by the number of API requests (including both search queries and index updates) and the number of index records. The Starter tier was inexpensive but had low limits on both quotas, and exceeding them could quickly raise the monthly cost. The Pro tier offered extended options, which was reflected in its pricing. With no middle tier, costs would be hard to manage in some cases, and we wouldn't have made full use of the Pro tier either.

Another issue was its extensibility.

Algolia provides modules to integrate both Magento and Wordpress with their service, and we were already using the one dedicated to Magento.

It's also possible to extend these modules as they’re not encoded in any way. It’s not the easiest task to hook into them, though.

At the same time, we found another module that integrates Magento with Elasticsearch. It came with its own XML configuration files, which made it easy to add separate indexes or provide additional data to existing ones.

As for integrating Wordpress with Elasticsearch, there were several options. We chose one of the most popular, ElasticPress, which worked correctly in our initial tests. High extensibility wasn't a priority here because we didn't need any additional data for the editorial content.

Migrating from React to Vue

After a few years, with a large amount of code left over from our experimental approach at the start of the project, we decided it was high time to refactor. We also realized it would simplify maintenance in the long run.

To get rid of the technical debt entirely, we chose a completely different front-end library. The drawback was that the migration forced us to rewrite the project. Nevertheless, within just a few months we deployed a completely rebuilt website.

Final Stack

In the end, our new application structure was built as follows:

- Magento 2 as the back-end for the catalog, cart, checkout, and customer management.
- Wordpress as the back-end for managing editorial content.
- An Elasticsearch server as the data source for both editorial content and catalog data.
- A Node.js application as the middleware layer between the front-end apps and the back-ends.
- Vue.js for the web front-end UI.
- Swift for the iOS smartphone app.

Wordpress integration with Elasticsearch required only a few adjustments.

We decided to move the middleware layer into a separate Node.js application to better manage the middleware changes required by two separate front-ends.

Unsurprisingly, the most time-consuming part was building the iOS app, because we had to do it from scratch.

Integrating Magento with Elasticsearch was also a demanding task, as we decided to push as much data as possible into Elasticsearch. By doing so, we wanted to speed up the page and remove caching from the Node.js layer.

The Elasticsearch server worked so well that no additional cache was needed in the middleware.

This is why we decided to store in it not only the catalog but also stock, directories, URL mappings, Magento CMS blocks, and indexed tax rates.

All of this data was quickly accessible from any front-end.

Thanks to that, our dependence on the Magento 2 API layer was reduced to the cart, checkout, and account management.
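For example, a URL mapping lookup in Elasticsearch could be an exact-match query. This sketch assumes a hypothetical `url_rewrites` index whose documents mirror columns of Magento's `url_rewrite` table, with `request_path` mapped as a `keyword` field. The query itself uses standard Elasticsearch `bool`/`filter`/`term` syntax.

```typescript
// Shape assumed for documents in a hypothetical "url_rewrites" index,
// mirroring columns of Magento's url_rewrite table.
interface UrlRewriteDoc {
  request_path: string;
  target_path: string;
  entity_type: "product" | "category" | "cms-page";
  entity_id: number;
  store_id: number;
}

interface SearchResponse<T> {
  hits: { hits: Array<{ _source: T }> };
}

// Builds an Elasticsearch search request for an incoming storefront URL.
// Assumes request_path is a keyword field, so term matches are exact.
function urlLookupRequest(browserPath: string, storeId: number) {
  // Magento stores request paths without a leading slash.
  const requestPath = browserPath.replace(/^\/+/, "");
  return {
    index: "url_rewrites",
    body: {
      size: 1,
      query: {
        bool: {
          // Filter clauses skip relevance scoring, which suits exact lookups.
          filter: [
            { term: { request_path: requestPath } },
            { term: { store_id: storeId } },
          ],
        },
      },
    },
  };
}

// Turns the first hit into the entity the front-end should render, if any.
function resolveRoute(
  res: SearchResponse<UrlRewriteDoc>,
): { type: UrlRewriteDoc["entity_type"]; id: number } | null {
  const hit = res.hits.hits[0];
  return hit ? { type: hit._source.entity_type, id: hit._source.entity_id } : null;
}
```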
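This split can be expressed as a small routing rule in the middleware. The `/api/<resource>/...` path scheme and the resource names are assumptions made for illustration.

```typescript
type Backend = "elasticsearch" | "magento";

// Resources that still need Magento's live API because they are
// per-customer and transactional. Everything else is read from Elasticsearch.
const MAGENTO_RESOURCES = new Set(["cart", "checkout", "account"]);

// Picks the back-end for a middleware route of the hypothetical form
// /api/<resource>/..., e.g. /api/cart/items or /api/products/SUIT-42.
function backendFor(path: string): Backend {
  // "/api/cart/items".split("/") -> ["", "api", "cart", "items"]
  const resource = path.split("/")[2] ?? "";
  return MAGENTO_RESOURCES.has(resource) ? "magento" : "elasticsearch";
}
```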

This approach had two major benefits:

1. The first was a page speed increase: the average request time for the category list or the product page dropped from around 800-1000 ms to just 200-400 ms.

2. The other big win was the reduction of maintenance downtime during deployments.

Previously, any change that had to be deployed to Magento caused 2-3 minutes of downtime in its REST service.

Now, with all catalog data stored separately, customers can browse the editorial content and the store even during a deployment.

The only parts that go offline during an update are the cart and the checkout, and for those we prepared a waiting screen that reloads itself once the deployment is complete.

This setup is also very helpful for debugging. In case of any back-end issues, we can safely put the back-end into maintenance mode and still keep most of the site usable.

Along with building a new sales channel in the form of the iOS app, we decided to rewrite the web front-end as well.

As mentioned earlier, to better manage changes, we moved the Node.js middleware layer previously used by the React-based site into a separate application.

It also allowed us to rebuild the front-end app, which had accrued technical debt over time. Updating React to its newest version also turned out to be challenging due to the changes the library had undergone.
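A waiting screen like the one described could poll a status endpoint with exponential backoff and reload once checkout is back. The status endpoint, the `CheckoutStatus` values, and the delay constants are hypothetical. `probe` and `sleep` are injected so the loop can run outside a browser.

```typescript
// Hypothetical status reported by a health-check endpoint that the
// waiting screen polls while Magento is being deployed.
type CheckoutStatus = "maintenance" | "available";

// Exponential backoff between polls (2 s, 4 s, 8 s, ...), capped so the
// screen reloads reasonably soon after the deployment finishes.
function nextPollDelayMs(attempt: number, baseMs = 2000, maxMs = 30000): number {
  return Math.min(baseMs * 2 ** attempt, maxMs);
}

// Polls until checkout is available, then calls onReady (in the browser,
// this would be window.location.reload()). Returns the number of polls made.
async function waitUntilAvailable(
  probe: () => Promise<CheckoutStatus>,
  sleep: (ms: number) => Promise<void>,
  onReady: () => void,
): Promise<number> {
  for (let attempt = 0; ; attempt++) {
    if ((await probe()) === "available") {
      onReady();
      return attempt + 1;
    }
    await sleep(nextPollDelayMs(attempt));
  }
}
```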

Analytics

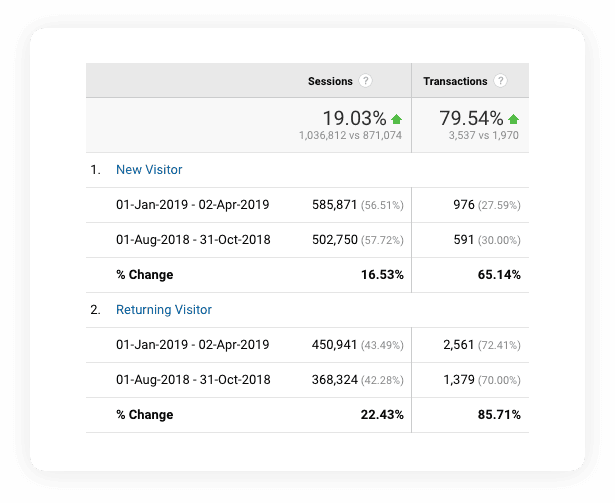

We compared data from August to October 2018 (three months before the change) with data from January to March 2019 (three months after the change). We deliberately excluded November and December: it's the high season, so the data would be skewed.

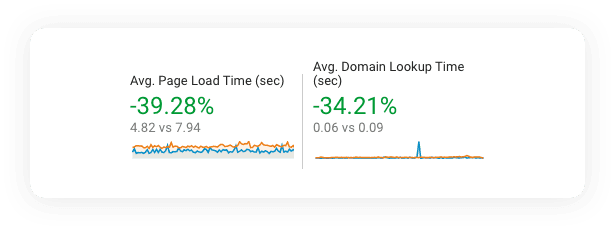

The average Page Load Time

dropped by 39% (7.9s to 4.8s)

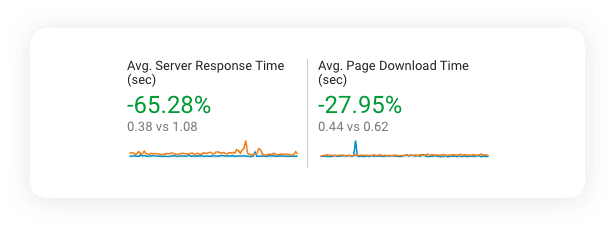

The average Server Response Time

dropped by 65% (1.08s to 0.38s)

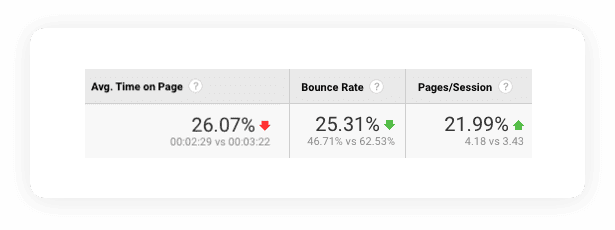

The average time on page

Decreased by 26% (3m 22s to 2m 29s) but, at the same time, the bounce rate also decreased by 25% (62.5% to 46%)

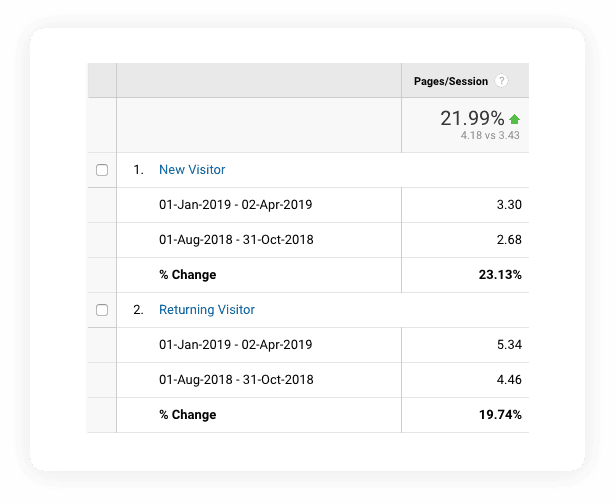

The average number of pages viewed in a session

Increased by 22% (3.43 to 4.18 pages per session)

Sessions

Increased by 19%, and sessions with transactions by 79%

The average conversion rate recorded from 1st September 2019 to 10th November 2019 increased by 78% compared to the period from August to October 2018 (however, it should be noted that the 2019 data was gathered after eight months of a paid Google Ads campaign).
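The relative changes quoted in this section can be checked against the before and after values. The helper below computes (after - before) / before as a percentage, rounded to one decimal place. Times on page are converted to seconds first.

```typescript
// Converts a "3m 22s"-style duration into seconds.
function toSeconds(minutes: number, seconds: number): number {
  return minutes * 60 + seconds;
}

// Relative change between two periods, in percent, rounded to one decimal.
function percentChange(before: number, after: number): number {
  return Math.round(((after - before) / before) * 1000) / 10;
}

const reported = {
  pageLoadTime: percentChange(7.9, 4.8), // seconds
  serverResponseTime: percentChange(1.08, 0.38), // seconds
  timeOnPage: percentChange(toSeconds(3, 22), toSeconds(2, 29)),
  pagesPerSession: percentChange(3.43, 4.18),
};
```

This yields -39.2, -64.8, -26.2, and 21.9. Rounded to whole numbers, these match the 39%, 65%, 26%, and 22% figures above.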

Would you like to create something similar and innovate your ecommerce project with Hatimeria?
